Artificial information filtering#

In simple terms, the bitwise information content is retrieved by checking how variable a bit pattern is. However, this approach cannot distinguish between real information content and artificial information content. By studying the distribution of the information content, the user can often identify clear cut-offs between real and artificial information content.
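To make "how variable a bit pattern is" more concrete, here is a simplified sketch. It assumes that the information of each bit position is measured as the mutual information between that bit in neighbouring values along one dimension (here the last axis). The function `bitwise_mutual_information` is a hypothetical helper for illustration only. It omits any significance testing and multi-dimensional handling that `xb.get_bitinformation` may apply.

```python
import numpy as np


def bitwise_mutual_information(x):
    """Hypothetical helper: mutual information (in bits) between bit `pos` of
    neighbouring float32 values along the last axis, for all 32 bit positions.

    Position 0 is the sign bit, 1-8 are the exponent bits, 9-31 the mantissa bits.
    """
    u = np.ascontiguousarray(x, dtype=np.float32).view(np.uint32)
    a = u[..., :-1].ravel()  # values x[j]
    b = u[..., 1:].ravel()   # their neighbours x[j + 1]
    info = np.zeros(32)
    for pos in range(32):
        shift = 31 - pos
        bit_a = ((a >> shift) & 1).astype(np.intp)
        bit_b = ((b >> shift) & 1).astype(np.intp)
        # joint probabilities of the bit pairs (0,0), (0,1), (1,0), (1,1)
        p = np.bincount(2 * bit_a + bit_b, minlength=4).reshape(2, 2) / a.size
        p_a = p.sum(axis=1)  # marginal of the bit in x[j]
        p_b = p.sum(axis=0)  # marginal of the bit in x[j + 1]
        nz = p > 0
        info[pos] = np.sum(p[nz] * np.log2(p[nz] / np.outer(p_a, p_b)[nz]))
    return info


# a smooth ramp: the leading mantissa bits are predictable from their neighbours
smooth = np.linspace(1.0, 2.0, 10_000, endpoint=False, dtype=np.float32)
print(np.round(bitwise_mutual_information(smooth)[9:14], 3))
```

Bits that are constant or purely random carry no mutual information in this sketch, while bits that change smoothly along the dimension carry close to one bit.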

The following example shows what such a separation of real and artificial information can look like. To this end, artificial information is added to an example dataset by applying linear quantization. Linear quantization is often applied to climate datasets (e.g. ERA5) and needs to be accounted for in order to retrieve a meaningful bitwise information content. Finally, an algorithm that aims at detecting this artificial information is introduced.

```python
import xarray as xr
import xbitinfo as xb
import numpy as np
```


Loading example dataset#

Here we use the openly accessible CONUS404 dataset, which is available at full precision.

```python
ds = xr.open_zarr(
    "s3://hytest/conus404/conus404_monthly.zarr",
    storage_options={
        "anon": True,
        "requester_pays": False,
        "client_kwargs": {"endpoint_url": "https://usgs.osn.mghpcc.org"},
    },
)

# select the accumulated downwelling shortwave radiation flux at top (ACSWDNT)
data = ds["ACSWDNT"]

# select a subset of the data for demonstration purposes
chunk = data.isel(time=slice(0, 2), y=slice(0, 1015), x=slice(0, 1050))
chunk
```

```
<xarray.DataArray 'ACSWDNT' (time: 2, y: 1015, x: 1050)> Size: 9MB
dask.array<getitem, shape=(2, 1015, 1050), dtype=float32, chunksize=(2, 350, 350), chunktype=numpy.ndarray>
Coordinates:
    lat      (y, x) float32 4MB dask.array<chunksize=(350, 350), meta=np.ndarray>
    lon      (y, x) float32 4MB dask.array<chunksize=(350, 350), meta=np.ndarray>
  * time     (time) datetime64[ns] 16B 1979-10-31 1979-11-30
  * x        (x) float64 8kB -2.732e+06 -2.728e+06 ... 1.46e+06 1.464e+06
  * y        (y) float64 8kB -2.028e+06 -2.024e+06 ... 2.024e+06 2.028e+06
Attributes:
    description:         ACCUMULATED DOWNWELLING SHORTWAVE FLUX AT TOP
    grid_mapping:        crs
    integration_length:  month accumulation
    long_name:           Accumulated downwelling shortwave radiation flux at top
    units:               J m-2
```

Creating dataset copy with artificial information#

Functions to encode and decode#


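```python
# Encoding function: linearly quantize (compress) the data to an integer type
def encode(chunk, scale, offset, dtype, astype):
    # shift by the offset and stretch onto the integer range
    enc = (chunk - offset) * scale
    # round to the nearest integer before casting
    enc = np.around(enc)
    # store in the smaller integer type, e.g. "u2" (uint16); `dtype` is unused here
    enc = enc.astype(astype, copy=False)
    return enc


# Decoding function: map the integers back to floating-point values
def decode(enc, scale, offset, dtype, astype):
    # invert the linear transformation
    dec = (enc / scale) + offset
    # cast back to the floating-point type, e.g. "f4" (float32); `astype` is unused here
    dec = dec.astype(dtype, copy=False)
    return dec
```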
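Written as formulas, and ignoring floating-point rounding, the two functions implement a linear quantization with scale $s$ and offset $x_{\min}$. The choice of $s$ below is the one used in the next step:

$$
q = \operatorname{round}\big((x - x_{\min})\,s\big), \qquad
\hat{x} = \frac{q}{s} + x_{\min}, \qquad
s = \frac{2^{16} - 1}{x_{\max} - x_{\min}}
$$

With this choice, $q$ takes the values $0, 1, \dots, 2^{16}-1$ and fits into `uint16`, while the round trip changes each value by at most half a quantization step, $|\hat{x} - x| \le \frac{1}{2s} = \frac{x_{\max} - x_{\min}}{2\,(2^{16}-1)}$. The decoded values therefore lie on a regular grid with spacing $1/s$ instead of using the full float32 resolution.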

Transform dataset to introduce artificial information#

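```python
# value range of the subset
xmin = np.min(chunk)
xmax = np.max(chunk)

# map [xmin, xmax] onto the full uint16 range [0, 2**16 - 1]
scale = (2**16 - 1) / (xmax - xmin)
offset = xmin

# quantize to uint16 and decode back to float32
enc = encode(chunk, scale, offset, "f4", "u2")
dec = decode(enc, scale, offset, "f4", "u2")
```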
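Because the CONUS404 subset is loaded from remote storage, the following self-contained sketch repeats the same round trip on synthetic float32 data, an assumption made purely for illustration. `quantize` and `dequantize` are hypothetical simplified versions of `encode` and `decode`. The sketch reports the grid spacing, the largest round-trip error (expected to stay within about half a grid step) and how many distinct values survive the quantization.

```python
import numpy as np


def quantize(x, scale, offset):
    # hypothetical simplified `encode`: linear map onto uint16
    return np.around((x - offset) * scale).astype(np.uint16)


def dequantize(q, scale, offset):
    # hypothetical simplified `decode`: back to float32
    return (q / scale + offset).astype(np.float32)


rng = np.random.default_rng(42)
x = rng.uniform(0.0, 1000.0, size=100_000).astype(np.float32)  # synthetic data

xmin, xmax = float(x.min()), float(x.max())
scale = (2**16 - 1) / (xmax - xmin)
dec = dequantize(quantize(x, scale, xmin), scale, xmin)

step = 1.0 / scale  # spacing of the quantization grid
max_err = np.abs(dec.astype(np.float64) - x.astype(np.float64)).max()
print(f"grid spacing: {step:.3e}, max abs error: {max_err:.3e}")
print(f"distinct values before: {np.unique(x).size}, after: {np.unique(dec).size}")
```

At most $2^{16}$ distinct values can remain after decoding, however many distinct float32 values the input contained.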

Comparison of bitinformation#

```python
# original dataset without artificial information
orig_info = xb.get_bitinformation(
    xr.Dataset({"w/o artif. info": chunk}),
    dim="x",
    implementation="python",
)

# dataset with artificial information
arti_info = xb.get_bitinformation(
    xr.Dataset({"w artif. info": dec}),
    dim="x",
    implementation="python",
)
```

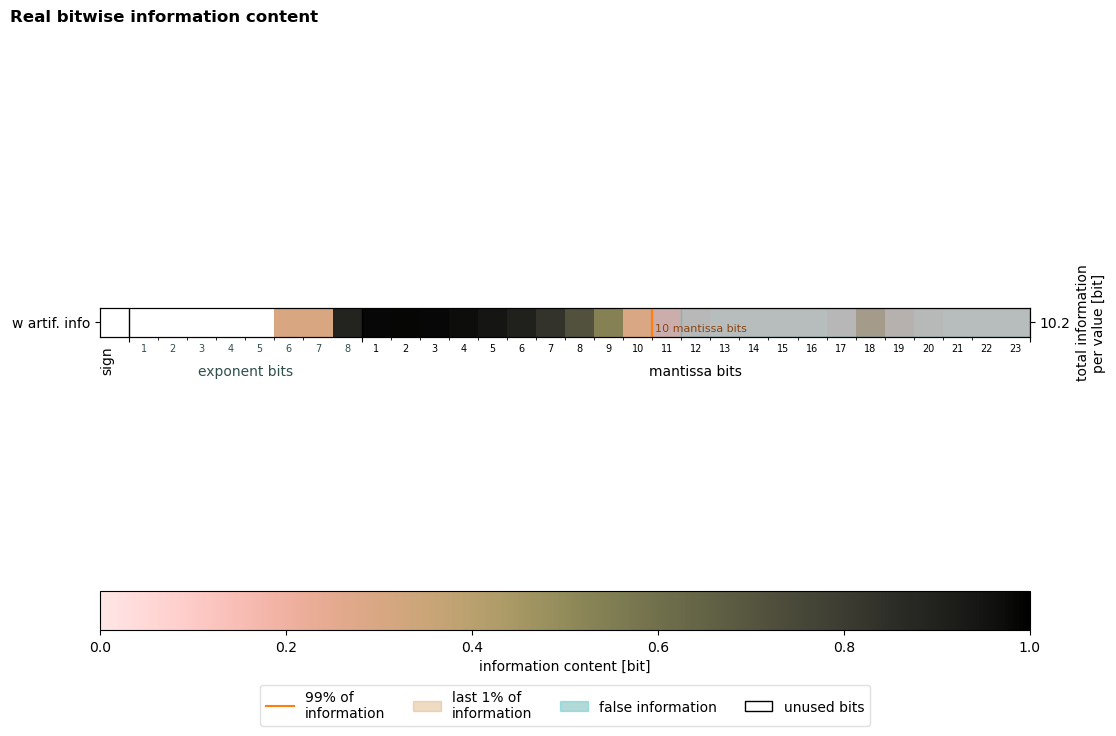

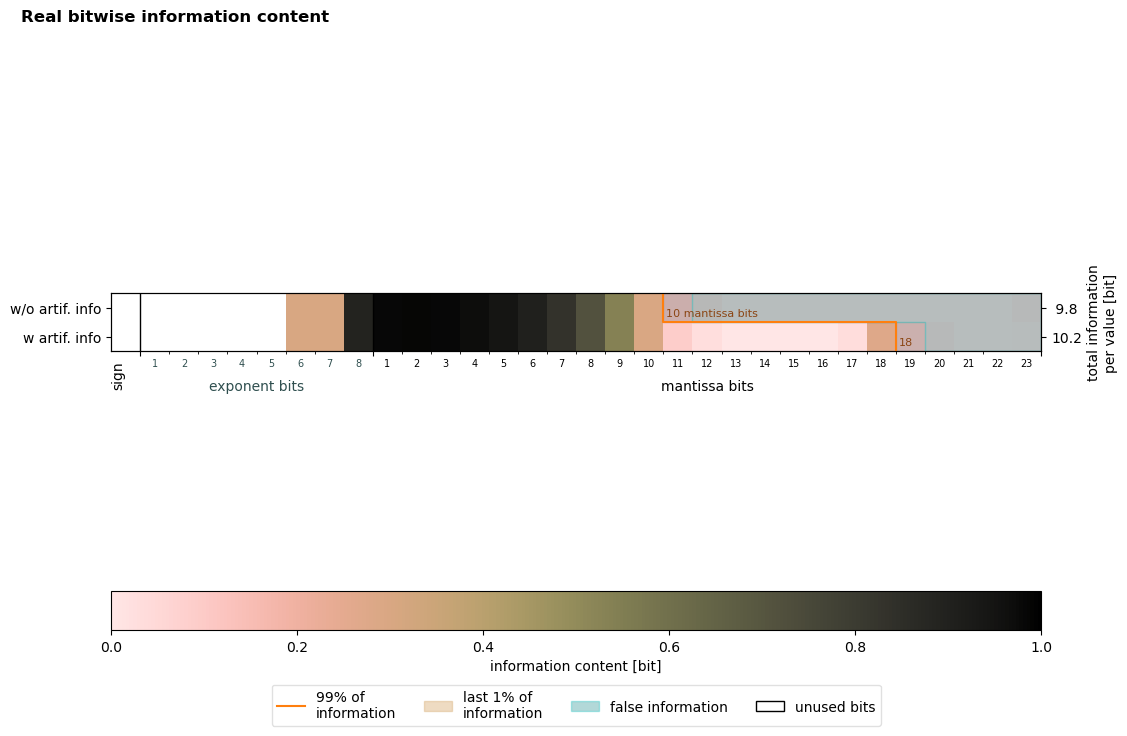

```python
# plotting distribution of bitwise information content
info = xr.merge([orig_info, arti_info])
plot = xb.plot_bitinformation(info)
```


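The figure shows that applying linear quantization introduces artificial information: the quantized dataset carries information content in trailing mantissa bits that hold little or no information in the original dataset.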

```python
keepbits = xb.get_keepbits(info, inflevel=[0.99])
print(
    f"The number of keepbits increased from {keepbits['w/o artif. info'].item(0)} bits in the original dataset to {keepbits['w artif. info'].item(0)} bits in the dataset with artificial information."
)
```

The number of keepbits increased from 10 bits in the original dataset to 18 bits in the dataset with artificial information.
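The keepbits are the number of mantissa bits needed to retain the requested information level (here 99 %). The hypothetical helper `keepbits_from_info` below sketches this idea under a simplifying assumption: the keepbits are taken as the smallest number of mantissa bits whose cumulative information reaches `inflevel`. It is not the implementation of `xb.get_keepbits`. The synthetic numbers show how a tail of artificial information inflates the result.

```python
import numpy as np


def keepbits_from_info(mantissa_info, inflevel=0.99):
    """Hypothetical helper: smallest number of mantissa bits whose cumulative
    information reaches `inflevel` of the total mantissa information."""
    info = np.nan_to_num(np.asarray(mantissa_info, dtype=float))
    cdf = np.cumsum(info) / info.sum()
    # first (0-based) bit at which the cumulative fraction reaches inflevel
    return int(np.argmax(cdf >= inflevel)) + 1


# synthetic information per mantissa bit, most significant bit first
real = [0.9, 0.8, 0.5, 0.2, 0.05, 0.005, 0.0, 0.0]
# the same bits followed by a tail of artificial information
with_tail = real + [0.1, 0.1, 0.1]

print(keepbits_from_info(real))       # 5
print(keepbits_from_info(with_tail))  # 11
```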

In the following, a gradient-based filter is introduced to remove this artificial information again, so that the number of keepbits stays close to its original value even when artificial information is present in a dataset.

Artificial information filter#

The gradient filter works as follows:

1. It computes the cumulative distribution function (CDF) of the bitwise information content.
2. It computes the gradient of the CDF to find the bit at which the gradient falls below a given `tolerance`, indicating a drop in information.
3. Simultaneously, it checks that the cumulative information exceeds a given `threshold`, i.e. the minimum fraction of the total information that has to be passed before a cut-off is allowed.

The bit at which the gradient has dropped to the tolerance while the cumulative sum exceeds the threshold gives the true number of keepbits (TrueKeepbits). All bits beyond this index are assumed to contain artificial information, and their information content is set to zero to cut them off.

This concept is implemented in the function `get_cdf_without_artificial_information` in `xbitinfo.py`.

Please note that this filter relies on a clear separation between real and artificial information content and might not work in all cases.
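The steps above can be sketched in a few lines of NumPy. The function `gradient_filter` is a hypothetical, simplified re-implementation for illustration only; using `np.diff` as the gradient is an assumption. The actual logic lives in `get_cdf_without_artificial_information` and may differ in details such as how sign and exponent bits are handled.

```python
import numpy as np


def gradient_filter(info_per_bit, threshold=0.7, tolerance=0.001):
    """Hypothetical sketch of the gradient filter described above.

    `info_per_bit` holds the bitwise information content ordered from the
    most to the least significant bit. Returns the number of leading bits
    treated as real information and a copy with all later bits set to zero.
    """
    info = np.nan_to_num(np.asarray(info_per_bit, dtype=float))
    cdf = np.cumsum(info) / info.sum()  # cumulative distribution of information
    gradient = np.diff(cdf)             # gradient[i]: information added by bit i + 1
    for i, slope in enumerate(gradient):
        # the CDF has flattened out AND enough information has been passed
        if slope < tolerance and cdf[i] > threshold:
            filtered = info.copy()
            filtered[i + 1:] = 0.0      # bits beyond index i treated as artificial
            return i + 1, filtered
    return info.size, info              # no cut-off found: keep all bits


# real information that decays to zero, followed by an artificial tail
info = [1.0, 1.0, 0.9, 0.8, 0.5, 0.2, 0.0, 0.0, 0.3, 0.3, 0.3]
n_real, filtered = gradient_filter(info, threshold=0.7, tolerance=0.001)
print(n_real)  # 6
print(filtered)
```

Requiring both conditions matters: the `threshold` check prevents a cut-off at an early plateau before most of the real information has been passed, while the `tolerance` check locates the gap that separates real from artificial information.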

```python
xb.get_keepbits(
    arti_info,
    inflevel=[0.99],
    information_filter="Gradient",
    threshold=0.7,
    tolerance=0.001,
)
```

```
<xarray.Dataset> Size: 20B
Dimensions:        (inflevel: 1)
Coordinates:
    dim            <U1 4B 'x'
  * inflevel       (inflevel) float64 8B 0.99
Data variables:
    w artif. info  (inflevel) int64 8B 10
```

With the filter applied, the number of keepbits (10) is identical to that of the dataset without artificial information. The plot of the bitinformation visualizes this:

```python
plot = xb.plot_bitinformation(arti_info, information_filter="Gradient")
```